Here is a little JavaScript program I wrote that lets you play with potential flow by inserting sources into a wind tunnel. Potential flow is an idealized flow characterized by irrotationality. The particular form used here is also inviscid and incompressible, and satisfies Laplace's equation for a velocity potential $\phi$:

$$\frac{\partial^2 \phi}{\partial x^2} + \frac{\partial^2 \phi}{\partial y^2} = 0$$

The velocities are recovered by:

$$u = \frac{\partial \phi}{\partial x}, \qquad v = \frac{\partial \phi}{\partial y}$$

Second-order-accurate finite differencing schemes are used to recover the velocities.

I will keep working on this, so this is by no means the final version. Currently I plan to add the following:

-Doublets

-Vortices

-Making it into a game

It is colored by potential. Click on it!



Saturday, May 10, 2014

Sunday, March 2, 2014

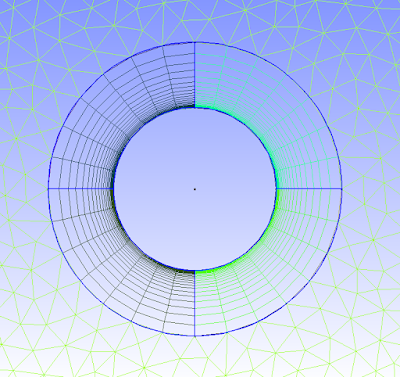

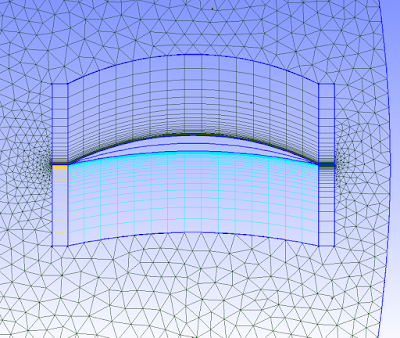

Cyclorotor CFD Simulations in OpenFOAM

A few months ago, I became interested in the cyclorotor concept, which goes by many names, including cyclogyro and cyclocopter. The concept has been around since the early 1900s and has recently seen renewed interest, which I think is due to modern materials and controls making it more feasible. Universities whose research papers I have seen include the University of Maryland and Seoul National University. To see one in action, check out this video:


I ran a few simulations in OpenFOAM to explore the capabilities of the cyclorotor. Here I describe validation runs showing that OpenFOAM can accurately predict cyclorotor performance. I used the pimpleDyMFoam solver in PISO mode (no outer SIMPLE iterations) and a Spalart-Allmaras RANS turbulence model with curvature correction.

Some more solver details:

-Max Courant number of 2

-First order in space and time

-Upwind spatial discretization scheme

OpenFOAM cannot run cyclorotor simulations 'out of the box'. It requires some custom solidBodyMotionFunctions, because each blade pitches in a prescribed way about its own pitch axis while also orbiting the rotor center. I have uploaded the code I used to GitHub here:

https://github.com/lordvon/OpenFOAM_Additions/tree/master/cycloRamp

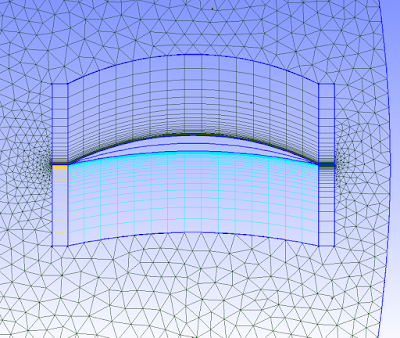

The mesh I used consisted of a square far-field boundary with inflation layers near the shaft and blades. I meshed it in Gmsh.

The following videos show the velocity magnitude and pressure fields.

The following compares experimental results from a Seoul National University research paper with my OpenFOAM simulations, which were performed in 2D. The OpenFOAM results match quite closely.

Ultimately, I found the cyclorotor concept not very practical (at the time of writing this). These are the reasons:

-Complicated mechanisms

-Forward flight performance not favorable

-Structures. Centrifugal loads are very high, and the blades have to resist them in their weak bending direction (the chord line is aligned tangentially rather than radially).

There is an interesting track mechanism, described in research reports I have seen, that can alleviate forward-flight problems and give greater blade pitch control in general, but in my opinion it is still complicated and sensitive to geometry. The mechanism involves wheels on the blade tips that ride on the inside of a specially shaped track. The wheels stay on the track because of the inherent centrifugal forces on the blades. Control comes from translating the track in the plane of rotation, as well as in the direction of the rotation vector. The track changes shape smoothly along the direction of the rotation vector so as to produce the desired pitches.

In my opinion the most significant advantage of the cyclorotor is thrust direction freedom.

At the request of Francois, you can find the mesh files here:

http://www.cfd-online.com/Forums/openfoam-programming-development/132068-cyclorotor-simulations.html#post482000

At the request of Thomas, I have posted the case and mesh files here:

http://www.cfd-online.com/Forums/openfoam/132562-help-compiling-code-newbie.html#post483542


Saturday, December 21, 2013

Github Code

I just wanted to share and link some code that I had uploaded to Github a while ago: https://github.com/lordvon?tab=repositories

Mainly there are two things:

-OpenFOAM Code.

-Incompressible flow solver, and related utilities.

The incompressible flow solver is based on J.B. Perot's Exact Fractional Step Method, which I wanted to use instead of OpenFOAM's PISO for transient flows. In my opinion the Exact Fractional Step Method is superior both theoretically and practically; you can find more information from Perot directly with a simple Google search. I coded it from scratch in C (with the help of the excellent tool Valgrind) and got to the point of being able to simulate a 2D box in a wind tunnel using an unstructured-multiblock grid. However, the additional features I required (3D, a sliding mesh interface) proved too much in terms of time and effort (especially debugging!), so I went back to OpenFOAM.

The OpenFOAM modules are very useful, and I use them heavily today. They include mesh motion functions for AMI moving regions, as well as turbulence models. Take a look at them if you are interested.

-Nested rotating interfaces: regions rotating and translating inside another rotating region, e.g. for cyclorotor simulations.

-Ramped rotating motion: a linear ramp-up of rotational velocity, so that the Courant number is not driven up too much by high-velocity start-up transients. This is intended to allow faster resolution of low-frequency starting phenomena in transient simulations.

-Proper and Official Spalart-Allmaras Turbulence Model: From a research publication written by Spalart himself in 2013. He acknowledges the confusion and variation in implementations of this popular RANS model and establishes the correct, most up-to-date way to implement it. It differs quite a bit from the standard OpenFOAM S-A model.

-Spalart-Allmaras with ROTATION/CURVATURE CORRECTION: Detailed in Zhang and Yang (2013), this model uses the Richardson number to sensitize the S-A model to rotation and curvature (R/C), while avoiding the significant additional computation required by standard S-A R/C correction models. The paper shows that the more efficient model gives almost identical results to the traditional model in several test cases.

-And others... take a look!


Tuesday, August 6, 2013

Writing an Incompressible Flow Solver

To everyone who has messaged me directly asking for help: I hope your issues are resolved. Sorry if I missed your message and never got back to you.

For the past few months I have been enthusiastically writing my own CFD code. It is an incompressible Navier-Stokes (INS) solver. The two reasons why I decided to write my own code instead of using OpenFOAM are the following:

1. Nested Sliding Interface Capability.

2. Time Accuracy.

I detail these reasons in the following paragraphs.

The First Reason

OpenFOAM AMI/GGI is hard-coded to handle only one rotating region. Users however have figured out a way to use more than one in the same simulation (it is somewhere on the CFD-online forums). However, to my knowledge there is no way to nest them. What I mean by nesting is putting one rotating region inside of another. AFAIK Star-CCM+ is capable of this, but that is out of my budget (which is non-existent), and the second reason I listed above still remains a problem.

The Second Reason

You would think that compared to the compressible NS (CNS) equations, the incompressible would be easier, since you get to make the assumption that density does not change and set all of the density derivatives to zero. It may be a simplifying assumption to some degree, but in fact, the solution method for the INS is less straightforward. With the CNS, the equation of state (pressure, density, temperature equation) closes the equation set. With the INS, you can no longer use this closing equation. What most people utilize now to address this is a bit of a dirty solution: Operator Splitting. Without the equation of state, there is no explicit equation for pressure, but it appears in the momentum equations of the INS. The widely employed dirty solution is to split the equations so you solve for approximate pressure and approximate velocity and have them relate to each other to satisfy continuity. As you might guess, this has been shown to reduce the temporal order of accuracy of whatever scheme you use. Temporal accuracy is especially important for turbomachinery-type simulations.
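As an illustration of operator splitting, here is the classic first-order projection method of Chorin, chosen as a representative example rather than the scheme of any particular code. A provisional velocity $\mathbf{u}^*$ is advanced without the pressure gradient, a Poisson equation is solved for pressure, and the velocity is then corrected:

$$
\begin{aligned}
\mathbf{u}^* &= \mathbf{u}^n + \Delta t\left[-(\mathbf{u}^n\cdot\nabla)\mathbf{u}^n + \nu\nabla^2\mathbf{u}^n\right] \\
\nabla^2 p^{n+1} &= \frac{\rho}{\Delta t}\,\nabla\cdot\mathbf{u}^* \\
\mathbf{u}^{n+1} &= \mathbf{u}^* - \frac{\Delta t}{\rho}\,\nabla p^{n+1}
\end{aligned}
$$

Taking the divergence of the last line and substituting the Poisson equation gives $\nabla\cdot\mathbf{u}^{n+1} = 0$. The price is that $\mathbf{u}^*$ and $p^{n+1}$ are only approximations tied together through this correction, which is where the splitting error in time enters.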

There is a way to solve for the INS without operator splitting, by utilizing vorticity formulations. However, these methods have major practicality drawbacks. These difficulties include specifying boundary conditions and complications that arise in three dimensions.

After some research I came across the Exact Fractional Step (EFS) Method by J.B. Perot. With this method you can solve the INS exactly, because pressure is eliminated. Furthermore, primitive variables are used, including in the specification of boundary conditions. Basically, the biggest issues of both the vorticity formulations and traditional operator-splitting methods are fixed, while their relative advantages are retained. To me it seems like this is THE way to solve the INS. Why is it not already widely used? According to Dr. Perot, whom I contacted personally by email, it is because humans are creatures of habit. Ha ha. Another reason I can immediately think of is that a particular arrangement of flow quantities is required. I am using a staggered variable arrangement, where velocity components are stored at the cell edges and scalar quantities (such as pressure or turbulence variables) are stored at cell centers. The staggered arrangement is less common in CFD solvers than co-located arrangements, where all velocity components and scalar quantities are stored at the nodes, because staggered arrangements are harder to transform accurately on structured grids. As I understand it, using staggered arrangements on unstructured grids is one of Dr. Perot's research topics. I forgot to mention that I am using structured grids in my code and am only interested in them, as opposed to unstructured grids. Anyway, I did find papers by Pieter Wesseling, who is now retired and has published a textbook on CFD, that show how to transform staggered arrangements accurately with relative ease and practicality.

Status

So far I have implemented a laminar multiblock structured grid explicit solver and tested it on a lid-driven cavity simulation. The results are good. Right now, I am in the finishing stages of implementing a Spalart-Allmaras turbulence model. I hope to soon run and post results of a high-Re airfoil simulation.

Although the solver is fully explicit, I still have to invert a system (i.e. solve a matrix Ax=b problem) as part of the EFS. As a side note, traditional operator-splitting methods also always have to invert a system for the pressure, and there the error is in continuity. For the EFS, however, the error is in vorticity, and continuity is always satisfied to machine precision. It stands to reason that continuity error is more detrimental than vorticity error.

Right now, I am using a canned Conjugate Gradient (CG) algorithm by Richard Shewchuk as the solver. In the distant future I plan to implement this algorithm on the GPU, and CG seems well suited to this. I am also interested in Algebraic Multigrid preconditioning, which tends to reduce the number of iterations by an order of magnitude. But until all of the desired solver features are done, vanilla CG will be good enough.

This has been quite a journey and I have learned very much. Thanks for reading.


Thursday, November 22, 2012

Boundary Conditions For an Open Wind Tunnel

An open wind tunnel takes in air from the surroundings and exhausts it back to the surroundings. This is in contrast to a closed wind tunnel, which recirculates the air (i.e. the outlet is connected to the inlet). The main advantage of the closed design is power savings.

This article will describe the boundary conditions necessary to simulate an open wind tunnel.

An important thing to realize is that static pressure in a wind tunnel is not constant from upstream to downstream, unlike the freestream static pressure around an actual vehicle in flight. This small but significant pressure gradient is what drives the flow, and it is created by the fan powering the wind tunnel (fans produce a pressure rise, which is how they provide thrust).

In aerodynamics, because air is invisible, many flow properties are measured indirectly. Wind tunnel velocity is typically measured through dynamic pressure, which is the additional pressure generated when a moving flow is brought to rest. For incompressible flows (below about Mach 0.3), the dynamic pressure can be calculated as 0.5*density*velocity^2.

Knowing this we can set up proper boundary conditions for an open wind tunnel. The following are the boundary conditions for U (velocity) and p (pressure). Set the desired wind tunnel velocity to V.

Walls: U = 0, p = zero gradient

Inlet: U = zero gradient, p = zero gradient, p0 (total pressure) = 1/2*density*V^2

Outlet: U = zero gradient, p = 0


Sunday, November 11, 2012

Getting Started with GPU Coding: CUDA 5.0

Hello everyone, this is a quick post detailing how to get started with harnessing the potential of your GPU via CUDA. Of course, I am planning to use it for OpenFOAM; I want to translate the pimpleDyMFoam solver for CUDA.


Especially awesome about CUDA 5.0 is the new Nsight IDE for Eclipse. If you have ever programmed with Eclipse (I have in Java), you know how spoiled you can get with all of the real-time automated correction and prediction.

Anyways, here are the steps I took:

I have Ubuntu 12.04, and even though the CUDA toolkit web page only has downloads for Ubuntu 11.10 and 10.04, it really does not matter: I used the 11.10 download without a hitch.

Download the CUDA toolkit at:

https://developer.nvidia.com/cuda-downloads

Before proceeding make sure you have all required packages:

sudo apt-get install freeglut3-dev build-essential libx11-dev libxmu-dev libxi-dev libgl1-mesa-glx libglu1-mesa libglu1-mesa-dev

Then go to the directory of the download in a terminal and type (without the angle brackets):

chmod +x <whatever the name of the download is>

sudo ./<whatever the name of the download is>

If you get an error of some sort like I did, type:

sudo ln -s /usr/lib/x86_64-linux-gnu/libglut.so /usr/lib/libglut.s

And try the 'sudo ...' command again.

The 'sudo ./...' command actually installs three packages: driver, toolkit, and samples. I actually installed the nvidia accelerated graphics driver separately via:

sudo apt-add-repository ppa:ubuntu-x-swat/x-updates

sudo apt-get update

sudo apt-get install nvidia-current

But if the included driver installation works for you, then by all means continue.

Next, add the CUDA paths to ~/.bashrc. Open it with:

gedit ~/.bashrc

Scroll down to bottom of file and add:

export PATH=/usr/local/cuda-5.0/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-5.0/lib64:/usr/local/cuda-5.0/lib:$LD_LIBRARY_PATH

Now to test everything out, go to your home directory:

cd ~/

Then go to the cuda samples folder (type 'ls' to view contents).

Once in the samples folder type 'make'. This will compile the sample codes, which may take a while. If you get an error (something like 'cannot find -lcuda', like I did), then type:

sudo ln -s /usr/lib/nvidia-current/libcuda.so /usr/lib/libcuda.so

Then go into the sample files and run the executables (whatever catches your fancy)! Executables are colored green if you view the file contents via 'ls', and you run the executables by typing './' before the name of the file.

Tuesday, August 14, 2012

OpenFOAM 2.1.1 GGI / AMI Parallel Efficiency Test, Part II

These figures are fairly self-explanatory. Again, these runs are on single computers: my i7-2600 has only four cores, and the other data set comes from a computer with 8 cores (2 x Opteron 2378). There is clearly a significant parallelization bottleneck (a serial component) in the AMI. However, if the interface patch interpolation is the serial component, this bottleneck may be controllable by minimizing the AMI interface patch face count.

Sunday, August 12, 2012

Connecting Two Posts: AMI Parallelizability and Hyperthreading Investigation

http://lordvon64.blogspot.com/2012/08/openfoam-211-ggi-ami-parallel.html

http://lordvon64.blogspot.com/2011/01/hyperthreading-and-cfd.html

The most recent post shows that, with AMI, parallel efficiency stays constant for any number of cores above one. In an older post testing the effect of hyperthreading, efficiency went down instead of staying constant. These results are still consistent, because the two tests were fundamentally different: one used hyperthreading and the other true parallelism. Hyperthreading makes more virtual cores, but with fewer physical resources per core (which makes sense; you cannot get something for nothing). Since we hypothesized that the lower efficiency is due to a serial component, weaker cores will slow down the overall simulation, because the serial component (which can only run on one processor) gets fewer resources. Thus the lower performance with hyperthreading is due to the serial component running on a weaker single core. The software could also have made a difference, as that test was run with GGI on OpenFOAM 1.5-dev.

This has practical implications for building or choosing a computer for AMI simulations: choose a processor with the strongest single-core performance. Choosing a processor with more cores but weaker individual cores would hurt AMI parallel simulations much more than one might expect. This actually applies to all simulations, since there is always some serial component, but the lower the parallel efficiency, the more important this guideline becomes.


Saturday, August 11, 2012

OpenFOAM 2.1.1 GGI / AMI Parallel Efficiency Test

A 2D incompressible turbulent transient simulation featuring rotating impellers was run on varying numbers of processors to test the parallel capability of the AMI feature in OpenFOAM. The case was small, about 180,000 cells total, to ensure that memory would not be a bottleneck. The exact same case was run on each number of processors. The computer used had 2 x AMD Opteron 2378 (2.4 GHz), for a total of 8 cores. I am not sure which CPU specs are relevant in this case, so you can look them up. Let me know in the comments which other specs are relevant and why.

The following are the results:

The efficiency is the most revealing metric here. There seems to be a serial component in the AMI that bottlenecks the performance. I will post some more results from a different computer shortly.


Thursday, May 24, 2012

Conjugate Heat Transfer in Fluent

This post gives a few tips on setting up a conjugate heat transfer simulation in ANSYS Fluent, aimed at someone who already knows how to run a simple aerodynamic case (that is, who knows their way around the GUI); there are plenty of tutorials online for that.

More specifically, we'll take the case of a simple metal block at a high temperature at t=0, with air flowing around it and cooling it over time. So there are two regions: a fluid region and a solid region.

You must set up the boundaries so that at the physical wall there is one boundary defined for the solid and one for the fluid. These walls will then be coupled. There are probably different ways to do this properly in different meshing programs, and maybe even different ways in certain circumstances. In my case, using Pointwise, it was sufficient to ignore the boundary and merely define the fluid and solid zones. When the mesh is imported into Fluent, Fluent automatically creates boundaries at the physical wall between the two zones, and you can couple them via 'Define > Mesh Interfaces'.

You set the initial temperature of your material by going to 'Patch' under 'Solution > Initial Conditions'. Select the solid zone, choose temperature as the variable to patch, and enter your value.

Those were the two things that for me were not straightforward.


Wednesday, May 2, 2012

GPU-accelerated OpenFOAM: Part 1

While installing Cufflink (the library that enables GPU acceleration in OpenFOAM) on my computer, I ran into a few caveats, especially as a green Linux user. Most of these issues were resolved quite simply by internet searches.

One issue I would like to document is that CUDA Toolkit 4.0 is unavailable: there is an entry in the NVIDIA archive, but no file to download (???). It turns out you can use 4.1 (maybe even 4.2, but I did not test it), but you have to use the older version of Thrust, which you can find on its Google Code page. Simply replace Thrust 1.5 in /usr/local/cuda/include with 1.4, and you will be able to run the testcg.cu file from the Cufflink repository.

So I finally got Cusp, Thrust, and CUDA to work. Onwards...

Tuesday, April 17, 2012

OpenFOAM 1.6-ext and GPU CFD

There have been some recent developments in the application of GPUs (graphics processing units) to scientific computing. The prominent CUDA language for programming GPUs was only introduced in 2006. GPU computing has been shown to offer an order of magnitude lower wall-clock run times at a fraction of the price! It seems like the computing solution of the future, for CFD at the very least! The obstacle to its widespread use has been software implementation, but this obstacle is fading away.

Here is a test case run by someone at the CFD-online forums:

So I was eager to buy a graphics card for $120 (GTX 550 Ti) and install OpenFOAM 1.6-ext, since Cufflink only works on 1.6-ext (I have been using 1.5-dev). There are quite a few differences, but it did not take me long to figure them out. The biggest difference is that 1.5-dev's GGI implementation uses PISO, while 1.6-ext uses PIMPLE. I could only get a stable run by using smoothSolver for the turbulence quantities with the kOmegaSST model (I used only smoothSolver in 1.5-dev as well, because I think it was the only parallel-compatible solver, which may still be the case here...).

Looking forward to using the cutting-edge in software and computational power. Thanks to all the open source CUDA developers.

A note about GMSH scripting

This is about a frustrating detail that took me a few hours to discover. Scripting in GMSH gives one flexibility in design, and parameterizing the mesh allows quick redesign. As objects can become complex rather quickly, it may be helpful to make 'functions' when scripting in GMSH. I say 'functions' because they take no parameters and act as though you copied and pasted the function body where you called it. So it is very primitive.

The caveat that took me some time to discover is that calling a function must be the last thing you do on a line, and you should not have multiple calls on one line. Only after a line break does the program refresh the variables and such.

Sunday, April 1, 2012

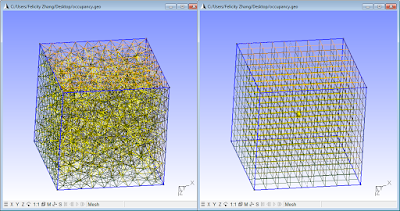

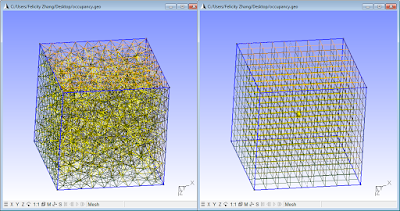

Unstructured vs. structured mesh occupancy

Hello all, this will be a quick post about how many cells it takes to fill a volume using unstructured cells and structured cells. This would presumably give some indication of how much computational work increases when using an unstructured mesh.

We will fill a simple unit cube (edge length 1) with cells of characteristic length 0.1 in gmsh (open source, free). I have included the gmsh (.geo) script used to generate these meshes below, so that you may play around with the numbers. Here is a visual comparison:

The results:

Unstructured: 1225 vertices, 6978 elements.

Structured: 1000 vertices, 1331 elements.

Meshing only the faces for a 2D comparison:

Unstructured: 593 vertices, 1297 elements.

Structured: 489 vertices, 603 elements.

So now I am a bit confused about vertices vs. elements. I would have thought the 2D element count would be 9*9*6 (9 cells per dimension, 6 faces) = 486, which is darn close to the vertex count. If anyone knows, please post; if not, maybe I will get to updating this in the meantime.
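A possible explanation, offered as a hedged sketch: `Transfinite Line {1:12} = cubeLength/cellLength` asks for 10 *nodes* per edge, i.e. 9 divisions, and the element total appears to count every element type Gmsh creates (0D point elements at the corners, 1D line elements on the edges, 2D quads, 3D hexahedra), not just the cells. Under that assumption, the counter below reproduces the structured 3D numbers exactly (1000 nodes; 729 + 486 + 108 + 8 = 1331 elements), and the 486 quads are exactly the 9*9*6 you would expect. For the surface-only mesh it gives 488 nodes and 602 elements, one short of the reported 489 and 603; my unverified guess is that the stray `Point(9)` in the script (a duplicate of `Point(1)` not attached to any line) adds that extra node and point element.

```python
def transfinite_cube_counts(points_per_edge):
    """Entity counts for a structured (transfinite) cube whose edges each
    carry `points_per_edge` nodes, i.e. points_per_edge - 1 divisions."""
    n = points_per_edge - 1                      # divisions per edge
    nodes_3d = points_per_edge ** 3              # all nodes in the volume
    interior = (points_per_edge - 2) ** 3        # nodes not on any face
    hexes = n ** 3                               # 3D cells
    quads = 6 * n ** 2                           # 2D cells on the six faces
    lines = 12 * n                               # 1D elements on the twelve edges
    corners = 8                                  # 0D point elements
    return {
        "nodes_3d": nodes_3d,
        "elements_3d": hexes + quads + lines + corners,
        "nodes_2d": nodes_3d - interior,         # surface-only mesh
        "elements_2d": quads + lines + corners,
        "hexes": hexes,
        "quads": quads,
    }

print(transfinite_cube_counts(10))
```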

Interestingly, there seems to be some pseudorandomness in the mesh generation: reloading the file and remeshing gave slightly different results. Another element count I got for unstructured 3D meshing was 6624.

The script:

```
// Toggle 0 or 1 for an unstructured or structured mesh, respectively.
transfinite = 1;

cellLength = 0.1;  // target characteristic cell length
cubeLength = 1;    // cube edge length

// Cube corners: {x, y, z, characteristic length}
Point(1) = {0,0,0,cellLength};Point(2) = {0,0,cubeLength,cellLength};
Point(3) = {0,cubeLength,0,cellLength};Point(4) = {0,cubeLength,cubeLength,cellLength};
Point(5) = {cubeLength,0,0,cellLength};Point(6) = {cubeLength,0,cubeLength,cellLength};
Point(7) = {cubeLength,cubeLength,0,cellLength};Point(8) = {cubeLength,cubeLength,cubeLength,cellLength};
// Note: Point(9) duplicates Point(1) and is not used by any line.
Point(9) = {0,0,0,cellLength};

// The twelve cube edges
Line(1) = {8, 7};
Line(2) = {7, 5};
Line(3) = {5, 6};
Line(4) = {6, 8};
Line(5) = {8, 4};
Line(6) = {4, 2};
Line(7) = {2, 6};
Line(8) = {3, 7};
Line(9) = {3, 4};
Line(10) = {3, 1};
Line(11) = {1, 2};
Line(12) = {1, 5};

// The six faces
Line Loop(13) = {5, -9, 8, -1};
Plane Surface(14) = {13};
Line Loop(15) = {10, 11, -6, -9};
Plane Surface(16) = {15};
Line Loop(17) = {7, 4, 5, 6};
Plane Surface(18) = {17};
Line Loop(19) = {3, 4, 1, 2};
Plane Surface(20) = {19};
Line Loop(21) = {8, 2, -12, -10};
Plane Surface(22) = {21};
Line Loop(23) = {3, -7, -11, 12};
Plane Surface(24) = {23};

// The volume
Surface Loop(25) = {22, 14, 18, 24, 20, 16};
Volume(26) = {25};

If(transfinite==1)
  // "= N" sets the number of nodes per edge, so N nodes give N-1 divisions.
  Transfinite Line {1:12} = cubeLength/cellLength;
  Transfinite Surface {14,16,18,20,22,24};
  Recombine Surface{14,16,18,20,22,24};  // quadrilaterals instead of triangles
  Transfinite Volume {26};                // structured volume mesh
EndIf
```

Tuesday, March 6, 2012

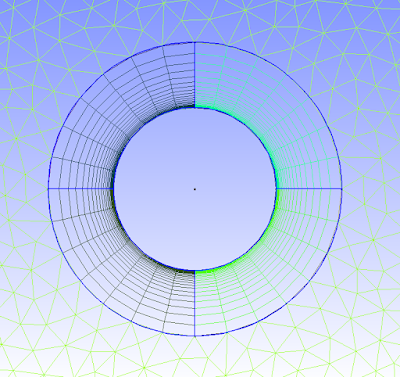

Hybrid structured/unstructured meshing with Gmsh

I recently became aware of Gmsh's awesome capability to create a hybrid structured-unstructured mesh.

If you are not familiar with these mesh types: an unstructured mesh is the kind that can be generated quickly around a complex body with the click of a button. Its cells are usually triangular in 2D (though they can have 4 or more sides) and usually tetrahedral in 3D. Structured meshes are made by specifying point distributions on the edges of rectangular blocks, and their cells are quadrilateral (square/rectangular) in 2D and hexahedral in 3D. Structured meshes usually take more time to generate than unstructured ones.

So why use structured meshes? One important flow phenomenon that unstructured meshes cannot capture efficiently is boundary layer separation, and for drag simulations, predicting boundary layer separation is key. Structured cells can have a high aspect ratio: small spacing normal to the wall to resolve the large velocity gradient, and larger spacing parallel to the wall, where the pressure and velocity gradients are much smaller. Unstructured cells stay roughly equilateral, and stretching them out results in poor mesh quality in terms of skewness. So resolving the boundary layer with an unstructured mesh takes many more cells than with a structured one; structured cells basically let you use your cells more efficiently.
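A back-of-the-envelope sketch of that efficiency argument, in 2D and with entirely hypothetical numbers: a boundary-layer strip 1 m long and 1 cm thick, resolved with a 0.5 mm wall-normal spacing. Stretched structured cells with an aspect ratio of 20 are compared against roughly isotropic cells of the same wall-normal size. The triangle count is a crude estimate that splits each square into two triangles.

```python
def cell_counts(length, thickness, dy, aspect_ratio):
    """Rough 2D cell counts needed to cover a length x thickness boundary-layer strip.

    dy: wall-normal spacing needed to resolve the velocity gradient.
    aspect_ratio: along-wall / wall-normal size of a stretched structured cell.
    """
    n_normal = round(thickness / dy)                         # cells across the layer
    n_along_stretched = round(length / (dy * aspect_ratio))  # long, thin structured cells
    n_along_iso = round(length / dy)                         # roughly isotropic cells
    structured = n_normal * n_along_stretched
    iso_quads = n_normal * n_along_iso
    iso_tris = 2 * iso_quads  # crude: each square split into two triangles
    return structured, iso_quads, iso_tris

# Hypothetical strip: 1 m of wall, 1 cm thick layer, 0.5 mm wall-normal spacing.
structured, iso_quads, iso_tris = cell_counts(1.0, 0.01, 0.0005, aspect_ratio=20)
print(structured, iso_quads, iso_tris)
```

The cell count in this region scales directly with the aspect ratio you are allowed to use, which is why a structured patch near the wall pays off even when the rest of the domain stays unstructured.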

So many CFD'ers use both unstructured and structured meshes; unstructured as the default, and structured in special areas of interest. Below are a few pictures of part of a hybrid mesh I created in Gmsh (free) for a 2D simulation in OpenFOAM (also free), and it worked perfectly in parallel simulation.

There are tutorial files on the Gmsh website that show you how to do this easily. Look at tutorial file t6.geo. Good luck!

Saturday, December 3, 2011

Parallel efficiency tests

Hello, today I bring the results of some quick parallel efficiency tests. On each of three computers I ran two identical cases: one on a single core and another on 4 cores. To get an efficiency factor, I divide the single-core run time by 4 times the 4-core run time. In the best case the result is 1, meaning no time was wasted on inter-core communication or overhead. But... we see this is not the case:
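The efficiency factor described above can be written as a one-line function. The wall times below are hypothetical and only show how the number behaves: perfect scaling gives 1.0, and a 4-core run that only halves the single-core time gives 0.5.

```python
def parallel_efficiency(t_single, t_multi, n_cores):
    """Efficiency = single-core time / (n_cores * multi-core time); 1.0 is ideal."""
    return t_single / (n_cores * t_multi)

# Hypothetical wall times in seconds, not the measured data from this post.
print(parallel_efficiency(t_single=4000.0, t_multi=1000.0, n_cores=4))  # prints 1.0
print(parallel_efficiency(t_single=4000.0, t_multi=2000.0, n_cores=4))  # prints 0.5
```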

As you can see, the results are quite bad. I am not sure what causes this inefficiency. One reason I have seen floating around the internets (both the good and bad internets) is that the primary cache (L1?) size severely limits this. I have also seen that the secondary and below cache (L2, L3) sizes are not very useful in this application at least.


Computer 1 has an i7-2600, Computer 2 has an i5-750, and Computer 3 has a Core 2 Quad Q9550. The memory is more than enough (verified by looking at System Monitor). I used OpenFOAM 1.5-dev with the generalized grid interface (GGI, for the rotor in the mesh).

Given these results my impetus to cluster computers together has died. Haha.

Thursday, November 10, 2011

Long time, no post: CFD and Carbon Fiber Prototyping

It's not that I have been on a project hiatus; I have been hard at work on CFD simulations, researching a possible invention! So I do not want to post everything I have done just yet. But I will be posting the physical prototype I referred to in a past post, made of water-jet-cut aluminum plate and driven by a ~600W brushless motor. It was made to validate CFD cases, and I gained some insight from it. I have also made public a YouTube video of my first FanWing CFD case, which was done a long while ago.

I have been working on clustering computers, that is, connecting more than one desktop with Ethernet cables and using their processors to work on one case together. I am doing it on Ubuntu 10.04 LTS with OpenFOAM 1.5-dev. I will have results shortly. Useful link: Clustering computers

I am now investigating building carbon fiber models, and I will see if I can do it myself rather than have a prototyping service do it.


Wednesday, February 16, 2011

Putting together a prototype/validation for CFD...

This is my second foray into building something mechanical. My previous venture, last summer, used only junkyard parts to build a testing platform for something similar to the FanWing (this was before I had even touched CFD, and is incidentally why I picked up CFD). It ended up weighing 80 pounds, with a 5 hp motor that I had used in 8th grade to power a massive, hot roller mill for making rubber bands for a science fair project (10+ ingredient chemical recipes, fun times), and a V-belt transmission driving a cross-flow-fan test section about a foot in diameter and less than 2 feet in span (picture of it here maybe, if I remember). I guess it ended up not being very useful, but given the amount of metalworking and welding I had to do, I have at least a modicum of pride in it.

Anyways, this time I used all of the internets to find non-junkyard parts for use with a water jet cutter (which is awesome, and which I now have access to) to build a prototype for CFD validation. The design in Autodesk Inventor (which, unlike SolidEdge ST3, makes it easy to use variables for dimensions, so you do not have to redo your whole design if a few parts change) looks very tight, and I am excited to build it.

Anyways, I always post to share something that I think may be useful. For this post, it is some vendors in case you are planning to build something:

Main vendors:

- McMaster-Carr (Everything mechanical)

- Hobby King (Motors, batteries, etc.)

Places that had stuff that the main vendors did not have:

- SDP SI (Has a whole lot of mechanical stuff, but I used them for their extensive variety of timing belt pulleys)

- Fastenal (For 4-40 square nuts, and if you do not want to buy set screws or square nuts, etc. by the 100's or more)

- Digikey (Angle brackets; usually an electronics supplier; I bought PIC microcontrollers from these guys before; use Google to search their catalog)

Although this post feels pretty insubstantial, it is but the calm before the storm of substance, if you will. I will be posting more about my mechatronic CFD validation, uhh... machine.


Tuesday, January 25, 2011

Hyperthreading and CFD

So I just performed a little experiment testing the effect of Intel's "hyperthreading" (HT) technology on CFD solver speed. HT, or as some have called it, "hype threading", is meant to increase multitasking performance by allocating processor resources more efficiently. Each HT core is seen as two cores by the operating system.

I expected that CFD solver speed would not benefit from enabling HT compared with disabling it. My computer contains an i7 2600, a four-core processor. I decomposed the HT Off case into four domains (one for each core), and of course I decomposed the HT On case into eight domains (one for each virtual core). I verified with Ubuntu's System Monitor application that all processors were at 100% usage and that the OS saw the right number of cores. HT was enabled and disabled in the BIOS.

In OpenFOAM, running the same exact case, the run times for HT on and off were:

HT On (8 virtual cores): 0.0692875 simulation seconds in 6213 real-life seconds.

HT Off (4 cores): 0.0692822 simulation seconds in 5154 real-life seconds.

So, as expected, HT does not give a performance gain, but in fact imparts a performance loss. We should expect similar performance because it is the same hardware, and computational efficiency usually decreases on a per-core basis with more cores (time is wasted on inter-core communication, etc.). We also see that the processor performs as expected (as four normal cores) with HT disabled.
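Because the two runs stopped at slightly different simulated times, a fair comparison normalizes wall-clock time by simulated time. The short sketch below does that arithmetic with the two measurements reported above; nothing else is assumed.

```python
# Measured values from this post: (simulated seconds, wall-clock seconds).
ht_on = (0.0692875, 6213.0)
ht_off = (0.0692822, 5154.0)

def wall_per_sim_second(run):
    """Wall-clock seconds spent per simulated second."""
    sim_time, wall_time = run
    return wall_time / sim_time

ratio = wall_per_sim_second(ht_on) / wall_per_sim_second(ht_off)
print(f"HT on needs {100 * (ratio - 1):.1f}% more wall time per simulated second")
```

This works out to roughly a 20% slowdown with HT enabled; the normalization barely matters here because the simulated times differ by less than 0.01%.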

Of course, I am not saying anything about HT's usefulness for multitasking, which is what it was designed for.


Thursday, January 6, 2011

For those who want to start CFD (Computational Fluid Dynamics) simulations...

I started CFD in OpenFOAM with no prior experience in CFD. I did not even have the Navier-Stokes equations memorized or anything. Do not write it off as impossible without even trying, as I once had.

A quick and useful introduction to CFD in general is available here.

Once you have some grasp of what CFD is about, you can move onto installing and using CFD programs. A great resource for all steps of the whole simulation process is CFD-online. This website was essential for enabling me to run my own simulations.

I would love to elaborate on whatever you cannot find in the two resources I described above. Please comment if you have any questions at all, and I will be sure to reply in a short time.
